What if you could scan your food to get a complete picture of its calories, ingredients and allergens, all in just 3 seconds? Welcome to the future, folks. TellSpec, a revolutionary food scanning device, is on its way to making this possible.

It’s often difficult to know exactly what’s in your food, which can have serious implications for anyone with food allergies or other health issues. Nutritional labeling offers only a limited amount of information, and it is hard to understand and not always accurate or complete. For example, the FDA does not require chemicals like mercury to be included on labels, even though consuming mercury can have severe health implications, like neurological damage and memory loss. Food scanning apps like ScanVert, Fooducate and ShopWell make food shopping easier and more transparent, but they cannot monitor how much mercury you are consuming.

That is why Isabel Hoffmann launched TellSpec after her daughter developed food and chemical allergies so severe that she had to take a year of sick leave from school. With a background as a mathematician, computer scientist and software developer, Hoffmann knew there had to be a better way. After a little digging, she found a Canadian manufacturer that makes affordable chip spectrometers that work in a hand-held device, and she got to work.

How It Works

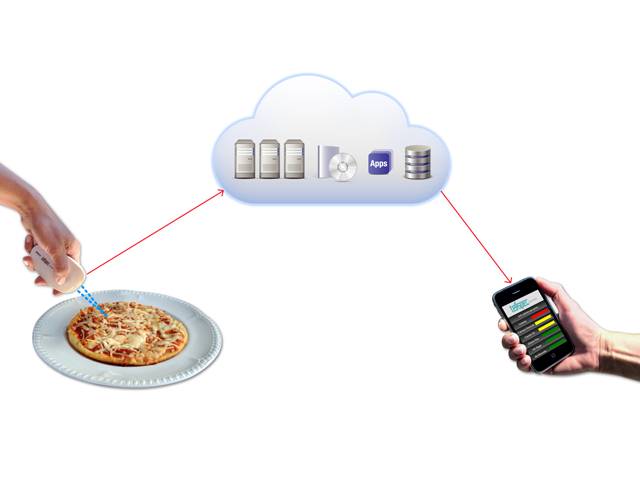

TellSpec has three components: a scanner, an analysis engine and a mobile app.

When someone scans a food item, the device beams a low-powered laser at the food and then measures the reflected light with a chip spectrometer. When the photons (particles of light) from the laser hit the molecules in the food, the molecules get excited and lower-energy photons reflect back, with different wavelengths depending on which molecules they hit. The spectrometer then sorts and counts the wavelengths and transmits the results through the user’s smartphone, via Bluetooth, to the cloud-based analysis engine.
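The "sorts and counts" step can be pictured as building a histogram of photon wavelengths. The sketch below is only an illustration of that idea, not TellSpec's firmware: the function name `bin_wavelengths`, the 5 nm bin width and the sample readings are all made up for the example.

```python
from collections import Counter


def bin_wavelengths(wavelengths_nm, bin_width_nm=5.0):
    """Sort photon wavelengths into fixed-width bins and count each bin.

    Hypothetical helper: the bin width and units are assumptions,
    not values published by TellSpec.
    """
    counts = Counter()
    for wavelength in wavelengths_nm:
        # Lower edge of the bin this wavelength falls into.
        lower_edge = int(wavelength // bin_width_nm) * bin_width_nm
        counts[lower_edge] += 1
    # Return (bin lower edge, photon count) pairs in ascending order.
    return sorted(counts.items())


# Invented readings, in nanometres, standing in for detected photons.
readings = [801.2, 803.9, 812.4, 813.0, 814.9, 826.1]
spectrum = bin_wavelengths(readings)
print(spectrum)
```

The resulting list of (wavelength bin, count) pairs is the kind of compact summary that could plausibly be sent over Bluetooth instead of raw photon data.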

This engine processes the photon wavelengths through a learning algorithm to determine the composition of the ingredients in the food. It then feeds that composition information to the TellSpec mobile app, where the user can view and interact with things like calories, ingredients and chemicals, as well as target intake and a health survey.

Every food scan improves TellSpec’s learning algorithm. When someone scans an unknown food, for example, the algorithm analyzes and deconstructs its composition. It then cross-references this data with ingredient information already in the database to produce its nutrition and ingredient information, which is then incorporated into the algorithm for future scans.
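The article does not say how the cross-referencing works. One simple way to picture it is to compare a new scan against stored reference spectra and pick the closest one. The sketch below uses cosine similarity as a stand-in technique; the reference database, its food names and the numbers in it are invented for illustration and are not TellSpec data.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length intensity vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # An all-zero spectrum matches nothing.
    return dot / (norm_a * norm_b)


def best_match(scan, reference_db):
    """Return (name, similarity) for the reference closest to the scan."""
    scored = ((name, cosine_similarity(scan, ref)) for name, ref in reference_db.items())
    return max(scored, key=lambda pair: pair[1])


# Hypothetical reference spectra: intensity per wavelength bin.
REFERENCE_DB = {
    "apple": [0.9, 0.1, 0.3],
    "bread": [0.2, 0.8, 0.5],
}

name, score = best_match([0.85, 0.15, 0.35], REFERENCE_DB)
print(name, round(score, 3))
```

In a scheme like this, a confirmed scan could be added to the reference set, which is one way that each scan might improve results for later ones.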

Funding

In October 2013, the company launched an Indiegogo campaign to build working TellSpec prototypes and raised nearly 4 times its goal. Building on its initial traction, the team is now looking to raise a $5-6 million seed round by the end of March, which Hoffmann is confident about securing given the amount of investor interest they’ve already had.

The device will hit the market in August 2014 for $350-$400, but Hoffmann hopes demand will drive the price down to $50 in a few years, Mashable reports.

The TellSpec API

This company is smart. One of its Indiegogo perks is access to the TellSpec software development kit. For a $690 pledge, donors, mostly developers and technologists, get access to TellSpec’s food database, app source code and the API for TellSpec’s analysis engine. Developers can both test foods to grow TellSpec’s global database and leverage the API to build or enhance their own apps. One donor, a Taiwanese developer, scientist and dialysis specialist, even reached out about leveraging the technology to perform quick, non-invasive urine tests, Hoffmann tells me.

Users

Contrary to Hoffmann’s assumption that the product would appeal most to food allergy sufferers, calorie counters turned out to be the largest category of early adopters. The number one user request so far has been calorie display – something that Hoffmann didn’t even want to include initially – followed by metabolic syndrome and weight loss. Chemicals are of the least concern to the general population right now, she says.

Next Steps

For now, the device starts by giving all users the same information about their food. If users select gluten information, for example, the algorithm will start including it in their food analysis. Developers working with the TellSpec team are pushing for the interface to let users fill out a profile at registration, with a series of questions that would allow for customization from the get-go. But Hoffmann worries that the device’s potential for food education (revealing chemicals and toxins that users may not even be aware of) may be lost if users can customize the interface at registration.

Eventually, however, she hopes to leverage the user data to build a massive database of food information that, if GPS enabled, could help stave off potential public health epidemics. Some day, for example, the data could show a sudden increase in gastrointestinal symptoms within a certain radius and indicate that there may be a food or water safety issue at play.
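To make the "within a certain radius" idea concrete, here is a rough sketch of how GPS-tagged symptom reports might be counted around a point and compared with a baseline. Everything in it is an assumption for illustration: the function names, the 10 km radius, the threefold spike threshold and the sample coordinates are not from TellSpec.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two latitude/longitude points, in km."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def reports_within(center, reports, radius_km):
    """Keep only the (lat, lon) reports inside the radius around center."""
    return [r for r in reports if haversine_km(center[0], center[1], r[0], r[1]) <= radius_km]


def is_spike(recent_count, baseline_count, factor=3.0):
    """Flag a spike when recent reports reach `factor` times the baseline."""
    return recent_count >= factor * max(baseline_count, 1)


# Invented report locations: three close together, one far away.
reports = [(43.65, -79.38), (43.66, -79.39), (43.70, -79.40), (45.50, -73.57)]
nearby = reports_within((43.65, -79.38), reports, radius_km=10.0)
print(len(nearby), is_spike(len(nearby), baseline_count=1))
```

A real system would need far more care around privacy, reporting bias and statistics; the point here is only how location and counts combine.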

“It’s about time we have a clean food revolution, [and] keep ourselves, our bodies healthy,” says Hoffmann. “The few of us that know about this need to do something. We are here to make everyone evolve.”